This guide explains how you can install and use KVM for creating and running virtual machines on a CentOS 6.4 server. I will show how to create image-based virtual machines and also virtual machines that use a logical volume (LVM). KVM is short for Kernel-based Virtual Machine and makes use of hardware virtualization, i.e., you need a CPU that supports hardware virtualization, e.g. Intel VT or AMD-V.

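I had SELinux disabled on my CentOS 6.4 system. I didn't test with SELinux enabled. It might work, but if it doesn't, switch off SELinux as well by setting SELINUX=disabled in /etc/selinux/config:

vi /etc/selinux/config

[...]

SELINUX=disabled

[...]

... and reboot: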

reboot
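If you prefer to script this step, SELinux is switched off permanently by setting SELINUX=disabled in /etc/selinux/config. Below is a minimal sketch that assumes GNU sed, which CentOS ships. The function name disable_selinux_config is hypothetical. It takes the file path as a parameter so you can try it on a copy first.

```shell
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Defaults to /etc/selinux/config; pass another path to test on a copy.
disable_selinux_config() {
    local cfg="${1:-/etc/selinux/config}"
    # Keep a backup of the original file next to it.
    cp -p "$cfg" "$cfg.bak" || return 1
    # Rewrite only the SELINUX= line; SELINUXTYPE= does not match ^SELINUX=.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run it as root and then reboot. Note that setenforce 0 only puts a running system into permissive mode until the next boot. It does not disable SELinux permanently.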

We also need a desktop system where we install virt-manager so that we can connect to the graphical console of the virtual machines that we install. I'm using a Fedora 17 desktop here.

2 Installing KVM

CentOS 6.4 KVM Host:

First check if your CPU supports hardware virtualization - if this is the case, the command

egrep '(vmx|svm)' --color=always /proc/cpuinfo

should display something, e.g. like this:

[root@server1 ~]# egrep '(vmx|svm)' --color=always /proc/cpuinfo

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt rdtscp lm 3dnowext 3dnow pni cx16 lahf_lm cmp_legacy svm extapic cr8_legacy misalignsse

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt rdtscp lm 3dnowext 3dnow pni cx16 lahf_lm cmp_legacy svm extapic cr8_legacy misalignsse

[root@server1 ~]#

If nothing is displayed, then your processor doesn't support hardware virtualization, and you must stop here.
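You can also wrap the same check in a small function that returns a usable exit status, which is handy at the top of a provisioning script. This is a sketch. The name has_hw_virt is hypothetical. The cpuinfo path is a parameter only so the function can be tested against a sample file.

```shell
# Hypothetical helper: succeed if a cpuinfo-style file lists the vmx (Intel VT)
# or svm (AMD-V) CPU flag. Defaults to /proc/cpuinfo.
has_hw_virt() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # -E: extended regex, -w: whole words only, -q: exit status only.
    if grep -E -q -w 'vmx|svm' "$cpuinfo"; then
        echo "hardware virtualization supported"
        return 0
    fi
    echo "no vmx/svm flag found" >&2
    return 1
}
```

In a script you might use it as has_hw_virt || exit 1.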

Now we import the GPG keys for software packages:

rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY*

To install KVM and virtinst (a tool to create virtual machines), we run

yum install kvm libvirt python-virtinst qemu-kvm

Then start the libvirt daemon:

/etc/init.d/libvirtd start

To check if KVM has successfully been installed, run

virsh -c qemu:///system list

It should display something like this:

[root@server1 ~]# virsh -c qemu:///system list

Id Name State

----------------------------------

[root@server1 ~]#

If it displays an error instead, then something went wrong.

Next we need to set up a network bridge on our server so that our virtual machines can be accessed from other hosts as if they were physical systems in the network.

To do this, we install the package bridge-utils...

yum install bridge-utils

... and configure a bridge. Create the file /etc/sysconfig/network-scripts/ifcfg-br0, using the IPADDR, PREFIX, GATEWAY, DNS1, and DNS2 values from the /etc/sysconfig/network-scripts/ifcfg-eth0 file. Make sure you use TYPE=Bridge, not TYPE=Ethernet:

vi /etc/sysconfig/network-scripts/ifcfg-br0

DEVICE="br0"

NM_CONTROLLED="yes"

ONBOOT=yes

TYPE=Bridge

BOOTPROTO=none

IPADDR=192.168.0.100

PREFIX=24

GATEWAY=192.168.0.1

DNS1=8.8.8.8

DNS2=8.8.4.4

DEFROUTE=yes

IPV4_FAILURE_FATAL=yes

IPV6INIT=no

NAME="System br0"

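Then modify /etc/sysconfig/network-scripts/ifcfg-eth0 as follows. Comment out BOOTPROTO, IPADDR, PREFIX, GATEWAY, DNS1, and DNS2, and add BRIDGE=br0:

vi /etc/sysconfig/network-scripts/ifcfg-eth0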

DEVICE="eth0"

#BOOTPROTO=none

NM_CONTROLLED="yes"

ONBOOT=yes

TYPE="Ethernet"

UUID="73cb0b12-1f42-49b0-ad69-731e888276ff"

HWADDR=00:1E:90:F3:F0:02

#IPADDR=192.168.0.100

#PREFIX=24

#GATEWAY=192.168.0.1

#DNS1=8.8.8.8

#DNS2=8.8.4.4

DEFROUTE=yes

IPV4_FAILURE_FATAL=yes

IPV6INIT=no

NAME="System eth0"

BRIDGE=br0

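You can also produce the two files with a script instead of editing them by hand. The sketch below assumes the eth0 file contains plain KEY=value lines as shown above, and it reuses the br0 settings from this guide. make_bridge_cfg is a hypothetical name. It takes both paths as parameters, so you can run it on copies and compare the results before touching /etc/sysconfig/network-scripts/.

```shell
# Hypothetical helper: write a br0 config from an eth0 config, then turn the
# eth0 config into a bridge port. Usage: make_bridge_cfg ETH_FILE BR_FILE
make_bridge_cfg() {
    local eth="$1" br="$2"
    {
        echo 'DEVICE="br0"'
        echo 'NM_CONTROLLED="yes"'
        echo 'ONBOOT=yes'
        echo 'TYPE=Bridge'
        echo 'BOOTPROTO=none'
        # Carry the addressing values over from the eth0 file unchanged.
        grep -E '^(IPADDR|PREFIX|GATEWAY|DNS1|DNS2)=' "$eth"
        echo 'DEFROUTE=yes'
        echo 'IPV4_FAILURE_FATAL=yes'
        echo 'IPV6INIT=no'
        echo 'NAME="System br0"'
    } > "$br" || return 1
    # In the eth0 file, comment out the moved settings (GNU sed -r, -i) ...
    sed -i -r 's/^(BOOTPROTO|IPADDR|PREFIX|GATEWAY|DNS1|DNS2)=/#&/' "$eth"
    # ... and attach eth0 to the bridge if that is not done yet.
    grep -q '^BRIDGE=' "$eth" || echo 'BRIDGE=br0' >> "$eth"
}
```

For example, back up ifcfg-eth0 first, then run make_bridge_cfg ifcfg-eth0 ifcfg-br0 inside /etc/sysconfig/network-scripts/.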

Restart the network...

/etc/init.d/network restart

... and run

ifconfig

It should now show the network bridge (br0):

[root@server1 ~]# ifconfig

br0 Link encap:Ethernet HWaddr 00:1E:90:F3:F0:02

inet addr:192.168.0.100 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::21e:90ff:fef3:f002/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:27 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:460 (460.0 b) TX bytes:2298 (2.2 KiB)

eth0 Link encap:Ethernet HWaddr 00:1E:90:F3:F0:02

inet6 addr: fe80::21e:90ff:fef3:f002/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:18455 errors:0 dropped:0 overruns:0 frame:0

TX packets:11861 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:26163057 (24.9 MiB) TX bytes:1100370 (1.0 MiB)

Interrupt:25 Base address:0xe000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:5 errors:0 dropped:0 overruns:0 frame:0

TX packets:5 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2456 (2.3 KiB) TX bytes:2456 (2.3 KiB)

virbr0 Link encap:Ethernet HWaddr 52:54:00:AC:AC:8F

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

[root@server1 ~]#
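To confirm from a script that eth0 is actually attached to br0, and not just that br0 exists, you can look at the kernel's sysfs entries. Each bridge has a bridge/ subdirectory and lists its ports under brif/. Below is a sketch. The name bridge_has_port is hypothetical, and the SYSFS_NET override exists only for testing.

```shell
# Hypothetical helper: succeed if BRIDGE is a Linux bridge and PORT is one of
# its ports, based on /sys/class/net/<bridge>/brif/<port>.
bridge_has_port() {
    local br="$1" port="$2" net="${SYSFS_NET:-/sys/class/net}"
    if [ ! -d "$net/$br/bridge" ]; then
        echo "$br is not a bridge" >&2
        return 1
    fi
    [ -e "$net/$br/brif/$port" ]
}
```

For example, run bridge_has_port br0 eth0 && echo ok. Interactively, brctl show (from the bridge-utils package installed above) shows the same information.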

3 Installing virt-viewer Or virt-manager On Your Fedora 17 Desktop

Fedora 17 Desktop:

We need a means of connecting to the graphical console of our guests - we can use virt-manager for this. I'm assuming that you're using a Fedora 17 desktop.

Become root...

su

... and run...

yum install virt-manager libvirt qemu-system-x86 openssh-askpass

... to install virt-manager.

If you're using an Ubuntu 12.04 desktop instead, you can install virt-manager as follows:

sudo apt-get install virt-manager

4 Creating A Debian Squeeze Guest (Image-Based) From The Command Line

CentOS 6.4 KVM Host:

Now let's go back to our CentOS 6.4 KVM host.

Take a look at

man virt-install

to learn how to use virt-install.

We will create our image-based virtual machines in the directory /var/lib/libvirt/images/, which was created automatically when we installed KVM in chapter 2.

To create a Debian Squeeze guest (in bridging mode) with the name vm10, 512MB of RAM, two virtual CPUs, and the disk image /var/lib/libvirt/images/vm10.img (with a size of 12GB), insert the Debian Squeeze Netinstall CD into the CD drive and run

virt-install --connect qemu:///system -n vm10 -r 512 --vcpus=2 --disk path=/var/lib/libvirt/images/vm10.img,size=12 -c /dev/cdrom --vnc --noautoconsole --os-type linux --os-variant debiansqueeze --accelerate --network=bridge:br0 --hvm

Of course, you can also create an ISO image of the Debian Squeeze Netinstall CD. Please create it in the /var/lib/libvirt/images/ directory. Later on I will show how to create virtual machines through virt-manager from your Fedora desktop, and virt-manager looks for ISO images in that directory...

dd if=/dev/cdrom of=/var/lib/libvirt/images/debian-6.0.5-amd64-netinst.iso

... and use the ISO image in the virt-install command:

virt-install --connect qemu:///system -n vm10 -r 512 --vcpus=2 --disk path=/var/lib/libvirt/images/vm10.img,size=12 -c /var/lib/libvirt/images/debian-6.0.5-amd64-netinst.iso --vnc --noautoconsole --os-type linux --os-variant debiansqueeze --accelerate --network=bridge:br0 --hvm

The output is as follows:

[root@server1 ~]# virt-install --connect qemu:///system -n vm10 -r 512 --vcpus=2 --disk path=/var/lib/libvirt/images/vm10.img,size=12 -c /var/lib/libvirt/images/debian-6.0.5-amd64-netinst.iso --vnc --noautoconsole --os-type linux --os-variant debiansqueeze --accelerate --network=bridge:br0 --hvm

Starting install...

Allocating 'vm10.img' | 12 GB 00:00

Creating domain... | 0 B 00:00

Domain installation still in progress. You can reconnect to

the console to complete the installation process.

[root@server1 ~]#
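If you create several guests this way, it can help to keep the virt-install options in one place. The sketch below rebuilds exactly the command used above. install_squeeze_guest and its argument order are hypothetical, and --vcpus=2 and bridge br0 are hard-coded. Setting DRY_RUN=1 only prints the command instead of running it.

```shell
# Hypothetical helper: create a Debian Squeeze guest on bridge br0.
# Usage: install_squeeze_guest NAME RAM_MB DISK_SPEC ISO
#   DISK_SPEC is the value passed to --disk, e.g. path=/var/lib/libvirt/images/vm10.img,size=12
install_squeeze_guest() {
    local name="$1" ram_mb="$2" disk="$3" iso="$4"
    set -- virt-install --connect qemu:///system -n "$name" -r "$ram_mb" \
        --vcpus=2 --disk "$disk" -c "$iso" --vnc --noautoconsole \
        --os-type linux --os-variant debiansqueeze --accelerate \
        --network=bridge:br0 --hvm
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$@"   # only show what would be run
    else
        "$@"
    fi
}
```

For the LVM-based guest in chapter 8, you would pass path=/dev/vg_server1/vm12 as the disk argument instead.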

5 Connecting To The Guest

The KVM guest will now boot from the Debian Squeeze Netinstall CD and start the Debian installer - that's why we need to connect to the graphical console of the guest. You can do this with virt-manager on the Fedora 17 desktop.

Go to Applications > System Tools > Virtual Machine Manager to start virt-manager:

Type in your password:

When you start virt-manager for the first time, you will most likely see the message Unable to open a connection to the libvirt management daemon. You can ignore this because we don't want to connect to the local libvirt daemon, but to the one on our CentOS 6.4 KVM host. Click on Close and go to File > Add Connection... to connect to our CentOS 6.4 KVM host:

Select QEMU/KVM as Hypervisor, then check Connect to remote host, select SSH in the Method drop-down menu, type in root as the Username and the hostname (server1.example.com) or IP address (192.168.0.100) of the CentOS 6.4 KVM host in the Hostname field. Then click on Connect:

If this is the first connection to the remote KVM server, you must type in yes and click on OK:

Afterwards type in the root password of the CentOS 6.4 KVM host:

You should see vm10 as running. Mark that guest and click on the Open button to open the graphical console of the guest:

Type in the root password of the KVM host again:

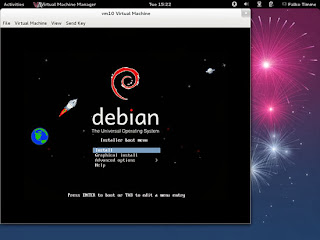

You should now be connected to the graphical console of the guest and see the Debian installer:

Now install Debian as you would normally do on a physical system. Please note that at the end of the installation, the Debian guest needs a reboot. The guest will then stop, so you need to start it again, either with virt-manager or like this on our CentOS 6.4 KVM host command line:

CentOS 6.4 KVM Host:

virsh --connect qemu:///system

start vm10

quit

Afterwards, you can connect to the guest again with virt-manager and configure the guest. If you install OpenSSH (package openssh-server) in the guest, you can connect to it with an SSH client (such as PuTTY).
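virt-manager connects to the KVM host over SSH using a libvirt remote URI of the form qemu+ssh://user@host/system. The same URI works with the command-line tools on the Fedora desktop. For example, you can open a guest's console with virt-viewer without going through the virt-manager GUI. Below is a sketch, assuming the virt-viewer package is installed on the desktop. kvm_uri, open_console and the KVM_HOST variable are hypothetical names. KVM_HOST defaults to the example address 192.168.0.100.

```shell
# Hypothetical helpers for reaching the CentOS KVM host from the desktop.
kvm_uri() {
    # libvirt remote URI: QEMU/KVM driver, SSH transport, system instance.
    echo "qemu+ssh://root@${KVM_HOST:-192.168.0.100}/system"
}

open_console() {
    # virt-viewer asks for the root password of the KVM host over SSH.
    virt-viewer -c "$(kvm_uri)" "$1"
}
```

For example, run open_console vm10, or virsh -c "$(kvm_uri)" list --all to list the host's guests from the desktop.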

6 Creating A Debian Squeeze Guest (Image-Based) From The Desktop With virt-manager

Instead of creating a virtual machine from the command line (as shown in chapter 4), you can also create it from the Fedora desktop using virt-manager. In case you're wondering: virt-manager can create virtual machines on remote systems, so the virtual machine will still be created on the CentOS 6.4 KVM host.

To do this, click on the following button:

The New VM dialogue comes up. Fill in a name for the VM (e.g. vm11), select Local install media (ISO image or CDROM), and click on Forward:

Next select Linux in the OS type drop-down menu and Debian Squeeze in the Version drop-down menu, then check Use ISO image and click on the Browse... button:

Select the debian-6.0.5-amd64-netinst.iso image that you created in chapter 4 and click on Choose Volume:

Now click on Forward:

Assign memory and the number of CPUs to the virtual machine and click on Forward:

Now we come to the storage. Check Enable storage for this virtual machine, select Create a disk image on the computer's hard drive, specify the size of the hard drive (e.g. 12GB), and check Allocate entire disk now. Then click on Forward:

Now we come to the last step of the New VM dialogue. Go to the Advanced options section. Select Host device vnet0 (Bridge 'br0'); that is the name of the bridge which we created in chapter 2. Click on Finish afterwards:

The disk image for the VM is now being created:

Afterwards, the VM will start. Type in the root password of the CentOS 6.4 KVM host:

You should now be connected to the graphical console of the guest and see the Debian installer:

Now install Debian as you would normally do on a physical system.

7 Managing A KVM Guest From The Command Line

KVM guests can be managed through virsh, the "virtual shell". To connect to the virtual shell, run

virsh --connect qemu:///system

This is how the virtual shell looks:

[root@server1 ~]# virsh --connect qemu:///system

Welcome to virsh, the virtualization interactive terminal.

Type: 'help' for help with commands

'quit' to quit

virsh #

You can now type in commands on the virtual shell to manage your guests. Run

help

to get a list of available commands:

virsh # help

Grouped commands:

Domain Management (help keyword 'domain'):

attach-device attach device from an XML file

attach-disk attach disk device

attach-interface attach network interface

autostart autostart a domain

blkiotune Get or set blkio parameters

blockpull Populate a disk from its backing image.

blockjob Manage active block operations.

console connect to the guest console

cpu-baseline compute baseline CPU

cpu-compare compare host CPU with a CPU described by an XML file

create create a domain from an XML file

define define (but don't start) a domain from an XML file

destroy destroy (stop) a domain

detach-device detach device from an XML file

detach-disk detach disk device

detach-interface detach network interface

domid convert a domain name or UUID to domain id

domjobabort abort active domain job

domjobinfo domain job information

domname convert a domain id or UUID to domain name

domuuid convert a domain name or id to domain UUID

domxml-from-native Convert native config to domain XML

domxml-to-native Convert domain XML to native config

dump dump the core of a domain to a file for analysis

dumpxml domain information in XML

edit edit XML configuration for a domain

inject-nmi Inject NMI to the guest

send-key Send keycodes to the guest

managedsave managed save of a domain state

managedsave-remove Remove managed save of a domain

maxvcpus connection vcpu maximum

memtune Get or set memory parameters

migrate migrate domain to another host

migrate-setmaxdowntime set maximum tolerable downtime

migrate-setspeed Set the maximum migration bandwidth

reboot reboot a domain

restore restore a domain from a saved state in a file

resume resume a domain

save save a domain state to a file

save-image-define redefine the XML for a domain's saved state file

save-image-dumpxml saved state domain information in XML

save-image-edit edit XML for a domain's saved state file

schedinfo show/set scheduler parameters

screenshot take a screenshot of a current domain console and store it into a file

setmaxmem change maximum memory limit

setmem change memory allocation

setvcpus change number of virtual CPUs

shutdown gracefully shutdown a domain

start start a (previously defined) inactive domain

suspend suspend a domain

ttyconsole tty console

undefine undefine an inactive domain

update-device update device from an XML file

vcpucount domain vcpu counts

vcpuinfo detailed domain vcpu information

vcpupin control or query domain vcpu affinity

version show version

vncdisplay vnc display

Domain Monitoring (help keyword 'monitor'):

domblkinfo domain block device size information

domblklist list all domain blocks

domblkstat get device block stats for a domain

domcontrol domain control interface state

domifstat get network interface stats for a domain

dominfo domain information

dommemstat get memory statistics for a domain

domstate domain state

list list domains

Host and Hypervisor (help keyword 'host'):

capabilities capabilities

connect (re)connect to hypervisor

freecell NUMA free memory

hostname print the hypervisor hostname

nodecpustats Prints cpu stats of the node.

nodeinfo node information

nodememstats Prints memory stats of the node.

qemu-attach QEMU Attach

qemu-monitor-command QEMU Monitor Command

sysinfo print the hypervisor sysinfo

uri print the hypervisor canonical URI

Interface (help keyword 'interface'):

iface-begin create a snapshot of current interfaces settings, which can be later commited (iface-commit) or restored (iface-rollback)

iface-commit commit changes made since iface-begin and free restore point

iface-define define (but don't start) a physical host interface from an XML file

iface-destroy destroy a physical host interface (disable it / "if-down")

iface-dumpxml interface information in XML

iface-edit edit XML configuration for a physical host interface

iface-list list physical host interfaces

iface-mac convert an interface name to interface MAC address

iface-name convert an interface MAC address to interface name

iface-rollback rollback to previous saved configuration created via iface-begin

iface-start start a physical host interface (enable it / "if-up")

iface-undefine undefine a physical host interface (remove it from configuration)

Network Filter (help keyword 'filter'):

nwfilter-define define or update a network filter from an XML file

nwfilter-dumpxml network filter information in XML

nwfilter-edit edit XML configuration for a network filter

nwfilter-list list network filters

nwfilter-undefine undefine a network filter

Networking (help keyword 'network'):

net-autostart autostart a network

net-create create a network from an XML file

net-define define (but don't start) a network from an XML file

net-destroy destroy (stop) a network

net-dumpxml network information in XML

net-edit edit XML configuration for a network

net-info network information

net-list list networks

net-name convert a network UUID to network name

net-start start a (previously defined) inactive network

net-undefine undefine an inactive network

net-uuid convert a network name to network UUID

Node Device (help keyword 'nodedev'):

nodedev-create create a device defined by an XML file on the node

nodedev-destroy destroy (stop) a device on the node

nodedev-dettach dettach node device from its device driver

nodedev-dumpxml node device details in XML

nodedev-list enumerate devices on this host

nodedev-reattach reattach node device to its device driver

nodedev-reset reset node device

Secret (help keyword 'secret'):

secret-define define or modify a secret from an XML file

secret-dumpxml secret attributes in XML

secret-get-value Output a secret value

secret-list list secrets

secret-set-value set a secret value

secret-undefine undefine a secret

Snapshot (help keyword 'snapshot'):

snapshot-create Create a snapshot from XML

snapshot-create-as Create a snapshot from a set of args

snapshot-current Get or set the current snapshot

snapshot-delete Delete a domain snapshot

snapshot-dumpxml Dump XML for a domain snapshot

snapshot-edit edit XML for a snapshot

snapshot-list List snapshots for a domain

snapshot-parent Get the name of the parent of a snapshot

snapshot-revert Revert a domain to a snapshot

Storage Pool (help keyword 'pool'):

find-storage-pool-sources-as find potential storage pool sources

find-storage-pool-sources discover potential storage pool sources

pool-autostart autostart a pool

pool-build build a pool

pool-create-as create a pool from a set of args

pool-create create a pool from an XML file

pool-define-as define a pool from a set of args

pool-define define (but don't start) a pool from an XML file

pool-delete delete a pool

pool-destroy destroy (stop) a pool

pool-dumpxml pool information in XML

pool-edit edit XML configuration for a storage pool

pool-info storage pool information

pool-list list pools

pool-name convert a pool UUID to pool name

pool-refresh refresh a pool

pool-start start a (previously defined) inactive pool

pool-undefine undefine an inactive pool

pool-uuid convert a pool name to pool UUID

Storage Volume (help keyword 'volume'):

vol-clone clone a volume.

vol-create-as create a volume from a set of args

vol-create create a vol from an XML file

vol-create-from create a vol, using another volume as input

vol-delete delete a vol

vol-download Download a volume to a file

vol-dumpxml vol information in XML

vol-info storage vol information

vol-key returns the volume key for a given volume name or path

vol-list list vols

vol-name returns the volume name for a given volume key or path

vol-path returns the volume path for a given volume name or key

vol-pool returns the storage pool for a given volume key or path

vol-upload upload a file into a volume

vol-wipe wipe a vol

Virsh itself (help keyword 'virsh'):

cd change the current directory

echo echo arguments

exit quit this interactive terminal

help print help

pwd print the current directory

quit quit this interactive terminal

virsh #

list

shows all running guests;

list --all

shows all guests, running and inactive:

virsh # list --all

Id Name State

----------------------------------

3 vm11 running

- vm10 shut off

virsh #

If you modify a guest's xml file (located in the /etc/libvirt/qemu/ directory), you must redefine the guest:

define /etc/libvirt/qemu/vm10.xml

Please note that whenever you modify the guest's xml file in /etc/libvirt/qemu/, you must run the define command again!

To start a stopped guest, run:

start vm10

To shut down a guest gracefully, run

shutdown vm10

To immediately stop it (i.e., pull the power plug), run

destroy vm10

Suspend a guest:

suspend vm10

Resume a guest:

resume vm10

These are the most important commands.

Type

quit

to leave the virtual shell.
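You can also pass the virsh commands above as arguments instead of typing them into the interactive shell, e.g. virsh --connect qemu:///system start vm10. By combining shutdown, domstate, and destroy, a script can shut a guest down gracefully and only pull the plug if it does not stop in time. This is a sketch. stop_guest is a hypothetical name, and the 120-second default timeout is an arbitrary choice. Graceful shutdown depends on the guest reacting to the shutdown request (typically an ACPI power button event). The VIRSH variable exists only so the function can be tested without libvirt.

```shell
# Hypothetical helper: graceful shutdown with a fallback to "destroy".
# Usage: stop_guest NAME [TIMEOUT_SECONDS]
stop_guest() {
    local name="$1" limit="${2:-120}" virsh_cmd="${VIRSH:-virsh}" waited=0 state
    "$virsh_cmd" --connect qemu:///system shutdown "$name" || return 1
    # Poll the domain state every 5 seconds until it is shut off or time is up.
    while [ "$waited" -lt "$limit" ]; do
        state=$("$virsh_cmd" --connect qemu:///system domstate "$name")
        [ "$state" = "shut off" ] && return 0
        sleep 5
        waited=$((waited + 5))
    done
    echo "$name did not shut down within ${limit}s, destroying it" >&2
    "$virsh_cmd" --connect qemu:///system destroy "$name"
}
```

For example, stop_guest vm10 is a scripted version of the "make sure the guest is stopped" step in chapter 9.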

8 Creating An LVM-Based Guest From The Command Line

CentOS 6.4 KVM Host:

LVM-based guests have some advantages over image-based guests. They are not as heavy on hard disk IO, and they are easier to back up (using LVM snapshots).

To use LVM-based guests, you need a volume group that has some free space that is not allocated to any logical volume. In this example, I use the volume group /dev/vg_server1 with a size of approx. 465GB...

vgdisplay

[root@server1 ~]# vgdisplay

--- Volume group ---

VG Name vg_server1

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 3

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 2

Max PV 0

Cur PV 1

Act PV 1

VG Size 465.28 GiB

PE Size 4.00 MiB

Total PE 119112

Alloc PE / Size 26500 / 103.52 GiB

Free PE / Size 92612 / 361.77 GiB

VG UUID ZXWn5k-oVkA-ibuC-ip8x-edLx-3DMw-UrYMXg

[root@server1 ~]#

... that contains the logical volumes /dev/vg_server1/LogVol00 with a size of approx. 100GB and /dev/vg_server1/LogVol01 (about 6GB) - the rest is not allocated and can be used for KVM guests:

lvdisplay

[root@server1 ~]# lvdisplay

--- Logical volume ---

LV Path /dev/vg_server1/LogVol01

LV Name LogVol01

VG Name vg_server1

LV UUID uUpXY3-yGfZ-X6bc-3D1u-gB4E-CfKE-vDcNfw

LV Write Access read/write

LV Creation host, time server1.example.com, 2012-08-21 13:45:32 +0200

LV Status available

# open 1

LV Size 5.86 GiB

Current LE 1500

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

--- Logical volume ---

LV Path /dev/vg_server1/LogVol00

LV Name LogVol00

VG Name vg_server1

LV UUID FN1404-Aczo-9dfA-CnNI-IKn0-L2hW-Aix0rV

LV Write Access read/write

LV Creation host, time server1.example.com, 2012-08-21 13:45:33 +0200

LV Status available

# open 1

LV Size 97.66 GiB

Current LE 25000

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:1

[root@server1 ~]#
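Instead of reading the Free PE / Size line by eye, you can ask LVM for the volume group's free space in bytes and compare it with the size you intend to give the guest. Below is a sketch. vg_has_room is a hypothetical name. It relies on the vgs options --noheadings, --nosuffix, --units b, and -o vg_free. The VGS variable exists only for testing.

```shell
# Hypothetical helper: succeed if volume group VG has at least NEED_GIB GiB free.
# Usage: vg_has_room VG NEED_GIB
vg_has_room() {
    local vg="$1" need_gib="$2" avail need
    # Free space in bytes, without header line or unit suffix.
    avail=$("${VGS:-vgs}" --noheadings --nosuffix --units b -o vg_free "$vg") || return 1
    avail=$(printf '%s' "$avail" | tr -d '[:space:]')
    need=$((need_gib * 1024 * 1024 * 1024))
    [ "$avail" -ge "$need" ]
}
```

For example: vg_has_room vg_server1 20 && lvcreate -L20G -n vm12 vg_server1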

I will now create the virtual machine vm12 as an LVM-based guest. I want vm12 to have 20GB of disk space, so I create the logical volume /dev/vg_server1/vm12 with a size of 20GB:

lvcreate -L20G -n vm12 vg_server1

Afterwards, we use the virt-install command again to create the guest:

virt-install --connect qemu:///system -n vm12 -r 512 --vcpus=2 --disk path=/dev/vg_server1/vm12 -c /var/lib/libvirt/images/debian-6.0.5-amd64-netinst.iso --vnc --noautoconsole --os-type linux --os-variant debiansqueeze --accelerate --network=bridge:br0 --hvm

Please note that instead of --disk path=/var/lib/libvirt/images/vm12.img,size=20 I use --disk path=/dev/vg_server1/vm12, and I don't need to define the disk space anymore because the disk space is defined by the size of the logical volume vm12 (20GB).

Now follow chapter 5 to install that guest.

9 Converting Image-Based Guests To LVM-Based Guests

CentOS 6.4 KVM Host:

Now let's assume we want to convert our image-based guest vm10 into an LVM-based guest. This is how we do it:

First make sure the guest is stopped:

virsh --connect qemu:///system

shutdown vm10

quit

Then create a logical volume (e.g. /dev/vg_server1/vm10) that has the same size as the image file. To find out the size of the image, type in ...

ls -l /var/lib/libvirt/images/

[root@server1 ~]# ls -l /var/lib/libvirt/images/

total 13819392

-rw-r--r-- 1 qemu qemu 177209344 May 12 22:41 debian-6.0.5-amd64-netinst.iso

-rw------- 1 root root 12884901888 Aug 21 15:37 vm10.img

-rw------- 1 qemu qemu 12884901888 Aug 21 15:51 vm11.img

[root@server1 ~]#

As you see, vm10.img has a size of exactly 12884901888 bytes. To create a logical volume of exactly the same size, we must specify -L 12884901888b (please don't forget the b at the end which tells lvcreate to use bytes - otherwise it would assume megabytes):

lvcreate -L 12884901888b -n vm10 vg_server1
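You can also read the byte count directly from the image file instead of copying it from the ls output. Below is a sketch, assuming GNU coreutils stat. lv_size_arg is a hypothetical name. lvcreate rounds sizes up to a whole number of physical extents (4 MiB in this volume group). 12884901888 bytes is exactly 12 GiB, i.e. 3072 extents, so here the logical volume ends up exactly as large as the image.

```shell
# Hypothetical helper: print an lvcreate -L argument (in bytes) matching a file.
lv_size_arg() {
    local bytes
    # stat -c %s prints the file size in bytes (GNU coreutils).
    bytes=$(stat -c %s "$1") || return 1
    # The trailing "b" tells lvcreate the size is given in bytes.
    echo "${bytes}b"
}

# Usage on the KVM host:
#   lvcreate -L "$(lv_size_arg /var/lib/libvirt/images/vm10.img)" -n vm10 vg_server1
```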

Now we convert the image:

qemu-img convert /var/lib/libvirt/images/vm10.img -O raw /dev/vg_server1/vm10
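Before you delete the image, it is worth checking that the copy is complete. Both the image and the logical volume are raw and exactly the same size, so a plain byte-by-byte comparison with cmp works. If the LV were larger (for example, after rounding up to whole extents), cmp would report a difference at the end of the shorter file even when the copy is good. Below is a sketch with the hypothetical name same_bytes. On a 12 GB image, the comparison takes a while.

```shell
# Hypothetical helper: succeed only if two files/devices have identical contents.
same_bytes() {
    # cmp -s compares silently; exit status 0 means identical.
    if cmp -s "$1" "$2"; then
        echo "identical: $1 = $2"
    else
        echo "DIFFERENT: keep $1" >&2
        return 1
    fi
}
```

For example: same_bytes /var/lib/libvirt/images/vm10.img /dev/vg_server1/vm10 && rm -f /var/lib/libvirt/images/vm10.img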

Afterwards you can delete the disk image:

rm -f /var/lib/libvirt/images/vm10.img

Now we must open the guest's xml configuration file /etc/libvirt/qemu/vm10.xml...

vi /etc/libvirt/qemu/vm10.xml

... and change the following section...

[...]

<disk type='file' device='disk'>

<driver name='qemu' type='raw' cache='none'/>

<source file='/var/lib/libvirt/images/vm10.img'/>

<target dev='vda' bus='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</disk>

[...]


... so that it looks as follows:

[...]

<disk type='block' device='disk'>

<driver name='qemu' type='raw' cache='none'/>

<source dev='/dev/vg_server1/vm10'/>

<target dev='vda' bus='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</disk>

[...]

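You can also apply the same change with sed. libvirt's documentation generally recommends virsh edit (or virsh dumpxml followed by virsh define) over editing the files in /etc/libvirt/qemu/ directly. For that reason, this sketch operates on whatever XML file you give it, for example a copy made with virsh dumpxml vm10 > /root/vm10.xml. file_disk_to_block is a hypothetical name. It assumes GNU sed and a guest with a single file-backed disk of device='disk' (as vm10 has). CD-ROM entries are left alone.

```shell
# Hypothetical helper: switch a guest's disk from an image file to a block device.
# Usage: file_disk_to_block XML_FILE IMAGE_PATH DEVICE_PATH
file_disk_to_block() {
    local xml="$1" img="$2" dev="$3"
    # Inside the <disk type='file' device='disk'> ... </disk> range only,
    # change the disk type and swap <source file='IMG'/> for <source dev='DEV'/>.
    sed -i "/<disk type='file' device='disk'>/,/<\/disk>/{
        s|<disk type='file'|<disk type='block'|
        s|<source file='$img'/>|<source dev='$dev'/>|
    }" "$xml"
}
```

After running it on the copy, load the result with virsh --connect qemu:///system define /root/vm10.xml.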

Afterwards we must redefine the guest:

virsh --connect qemu:///system

define /etc/libvirt/qemu/vm10.xml

Still on the virsh shell, we can start the guest...

start vm10

... and leave the virsh shell:

quit

10 Links

Source:

http://www.howtoforge.com/virtualization-with-kvm-on-a-centos-6.4-server